Blog Intro

Welcome to this new blog series, where I’ll be covering the development of VisTag. What it is and how it works are explained below. I’ll be posting development updates as they come up, and you can follow the project on my GitHub.

Stated Goal

The goal of VisTag is to eliminate hours of manual classification and organization work, making it much faster for game development teams to find the right assets.

What is VisTag

VisTag is a tool that automatically tags and organizes 3D game assets based on how they look. It works by taking images of your 3D models and using a Machine Learning model to classify them.

Here’s how it works:

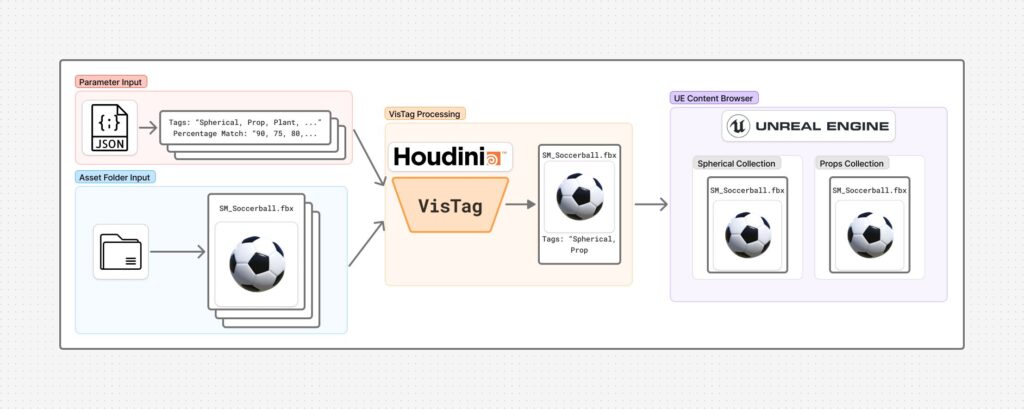

1.) The user inputs a folder of 3D assets and a parameter file

2.) Images of each asset are captured and passed to the model, along with the parameters, for processing

3.) The model assigns user-supplied tags like “weapon,” “vehicle,” or “character” based on percentage confidence scores

4.) These tags become metadata that travels with your asset

5.) When imported into Unreal Engine, assets are automatically organized into collections based on their tags

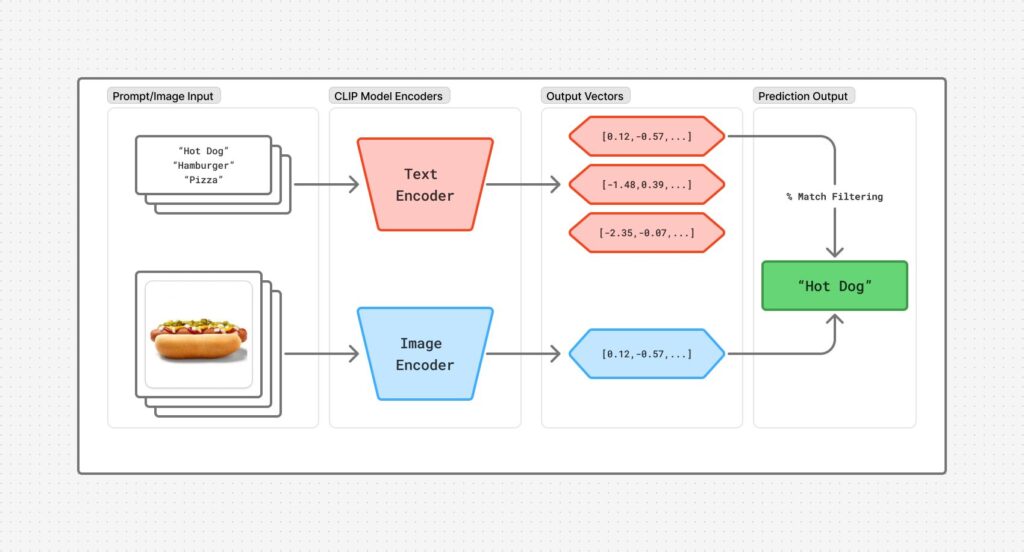

CLIP Model

The model I am using for this project is OpenAI’s CLIP (Contrastive Language-Image Pre-Training). It performs what is called zero-shot image classification, meaning it can classify images into categories it was never explicitly trained on. I opted for this technique because it lets me classify 3D models without having to train a new model on those assets.

Current Development

First, Why Houdini?

Houdini is already really good at loading in models from a file structure and performing operations on them. It also makes it easier to visualize the pipeline steps, which makes locating/debugging issues more straightforward than a strictly Python approach.

I’m using a version of the pre-trained CLIP model in the .onnx file format. This allows me to use the ONNX Inference node in Houdini, which (in theory) makes feeding data in and out of the model easier.

Where I am at

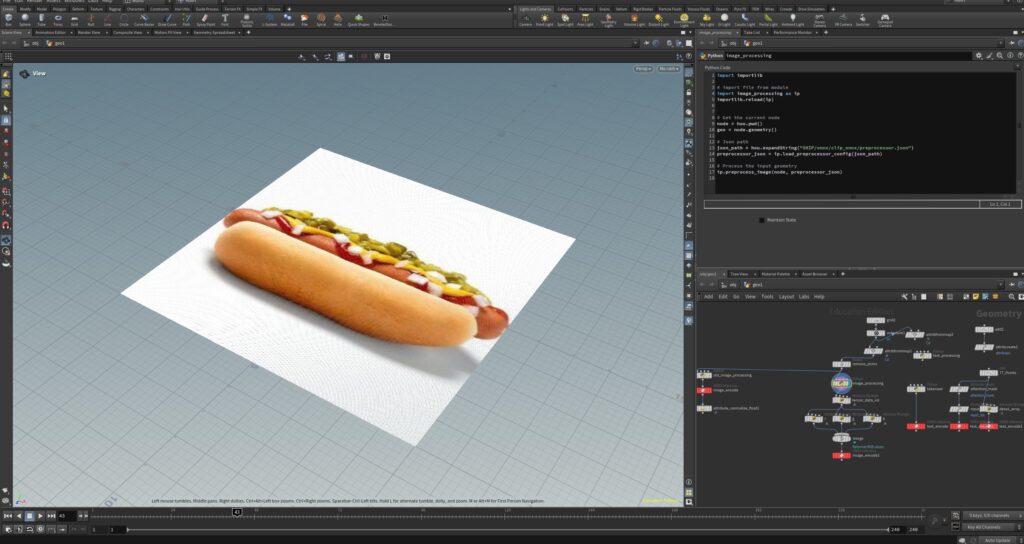

At the moment I have the Image Processing model set up and behaving as expected: input an image -> output values for comparison.

I’ve also developed a system to capture images of the loaded 3D asset regardless of its dimensions and location within 3D space.
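To make the idea of framing an asset "regardless of its dimensions and location" concrete, here is a rough sketch of one common approach, not necessarily what my Houdini network does: fit a bounding sphere around the asset and back the camera off until that sphere fits inside the field of view. The function name, the default field of view, and the `margin` padding factor are all assumptions for illustration.

```python
import math
import numpy as np

def frame_asset(points, fov_degrees=45.0, view_dir=(0.0, 0.0, 1.0), margin=1.1):
    """Return (camera_position, look_at_target, distance) that frames all points.

    view_dir points from the asset toward the camera.
    """
    pts = np.asarray(points, dtype=float)

    # Axis-aligned bounding box of the asset, wherever it sits in space.
    bbox_min = pts.min(axis=0)
    bbox_max = pts.max(axis=0)
    center = (bbox_min + bbox_max) / 2.0

    # Sphere that encloses the bounding box (half of the box diagonal).
    radius = np.linalg.norm(bbox_max - bbox_min) / 2.0

    # A sphere of radius r fits in a view cone with half-angle a
    # when the camera is at least r / sin(a) away from its center.
    half_fov = math.radians(fov_degrees) / 2.0
    distance = margin * radius / math.sin(half_fov)

    direction = np.asarray(view_dir, dtype=float)
    direction = direction / np.linalg.norm(direction)
    camera_pos = center + direction * distance
    return camera_pos, center, distance

# A 2-unit cube sitting away from the origin (two opposite corners are enough).
camera_pos, target, distance = frame_asset(
    [(10, 10, 10), (12, 12, 12)], fov_degrees=90.0, margin=1.0
)
print(camera_pos, target, distance)
```

Because the distance scales with the bounding sphere, a tiny prop and a huge building end up filling roughly the same share of the frame, which should help keep the model inputs consistent.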

Current Problems

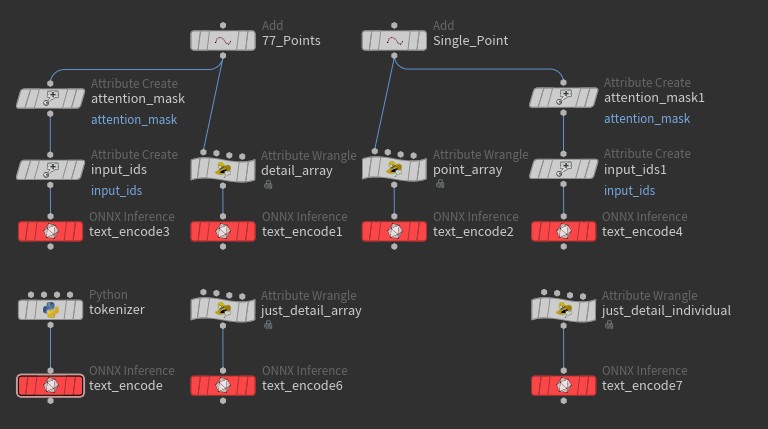

About that whole “.onnx files make things easier” claim: that has been the case for the Image Processing side of the model, but things haven’t gone as smoothly on the Text Processing side.

To understand the problem, you need to know a bit more about the pipeline. First, I get a string input of the text that I would like to use for the tags. Next, I tokenize the text using a CLIPTokenizer from the HuggingFace transformers Python library, which gives me two outputs: input_ids and an attention_mask. The input_ids are the tokenized version of the text that can be read by the model, and the attention_mask is a mask of 1s and 0s that tells the model which parts of the input are real tokens and which are padding.
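To show what those two outputs look like, here is a small NumPy-only sketch that mimics the layout a tokenizer typically produces. It is an illustration, not the tokenizer itself: the context length of 77, the start/end/padding token ids, and the int64 dtype are assumptions based on the commonly used CLIP checkpoints, and the word token ids are made up.

```python
import numpy as np

def pad_tokens(token_ids, context_length=77, start_id=49406,
               end_id=49407, pad_id=49407, dtype=np.int64):
    """Wrap token ids in start/end markers and pad to a fixed length.

    All default ids and the context length are assumptions; check them
    against the tokenizer that matches your exported model.
    """
    ids = [start_id] + list(token_ids) + [end_id]
    if len(ids) > context_length:
        raise ValueError("too many tokens for the context window")

    n_real = len(ids)
    n_pad = context_length - n_real

    # Shape (1, context_length): a batch containing a single prompt.
    input_ids = np.array([ids + [pad_id] * n_pad], dtype=dtype)
    # 1 marks a real token, 0 marks padding the model should ignore.
    attention_mask = np.array([[1] * n_real + [0] * n_pad], dtype=dtype)
    return input_ids, attention_mask

# Hypothetical ids standing in for the tokens of a tag like "vehicle".
input_ids, attention_mask = pad_tokens([1000, 2000])
print(input_ids.shape, attention_mask.shape, int(attention_mask.sum()))
```

In practice the tokenizer can do this directly; calling it with `padding="max_length"`, `max_length=77`, and `return_tensors="np"` should produce arrays in this layout, though the dtype the exported model expects still has to be checked separately.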

The problem is that the input format the Text Processing .onnx model expects is unclear. I’ve tried several different approaches, but the format it will accept is still unknown to me.
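One way to take the guesswork out of this is to ask the model file what it expects. The sketch below uses ONNX Runtime's Python package to list each input and output with its name, shape, and element type. The helper names and the file name in the usage comment are hypothetical, and I haven't confirmed what my particular export reports.

```python
def describe_io(session):
    """Return (name, shape, type) tuples for a session's inputs and outputs."""
    inputs = [(arg.name, arg.shape, arg.type) for arg in session.get_inputs()]
    outputs = [(arg.name, arg.shape, arg.type) for arg in session.get_outputs()]
    return inputs, outputs

def describe_model(path):
    """Load an .onnx file on the CPU and describe its inputs and outputs."""
    # Imported here so describe_io can be used without onnxruntime installed.
    import onnxruntime as ort

    session = ort.InferenceSession(path, providers=["CPUExecutionProvider"])
    return describe_io(session)

# Usage (hypothetical file name):
# inputs, outputs = describe_model("clip_text.onnx")
# for name, shape, type_str in inputs:
#     print(name, shape, type_str)
```

The type strings (for example `tensor(int64)` versus `tensor(int32)`) and any fixed dimensions in the shapes are the details most likely to explain a rejected input.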

Future Development

Text Processing

I’m going to continue experimenting with different input formats. If all else fails, I’m going to try loading the .onnx models and feeding inputs into them using Python.
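If I do end up going the Python route, a sketch like the following is roughly what I have in mind: cast each array to whatever element type the model declares, then run it through ONNX Runtime. The `run_with_declared_types` helper and the small type table are assumptions for illustration, and the table only covers the few types likely to show up here.

```python
import numpy as np

# ONNX Runtime type strings mapped to NumPy dtypes (deliberately incomplete).
ONNX_TO_NUMPY = {
    "tensor(int64)": np.int64,
    "tensor(int32)": np.int32,
    "tensor(float)": np.float32,
}

def run_with_declared_types(session, arrays):
    """Cast each named array to the dtype the model declares, then run it.

    session is expected to behave like onnxruntime.InferenceSession;
    arrays maps input names to array-like values.
    """
    feed = {}
    for arg in session.get_inputs():
        if arg.name not in arrays:
            raise KeyError(f"model expects an input named {arg.name!r}")
        dtype = ONNX_TO_NUMPY[arg.type]
        feed[arg.name] = np.asarray(arrays[arg.name]).astype(dtype)
    # Passing None as the first argument requests every output.
    return session.run(None, feed)

# Usage (hypothetical file name):
# import onnxruntime as ort
# session = ort.InferenceSession("clip_text.onnx", providers=["CPUExecutionProvider"])
# outputs = run_with_declared_types(
#     session, {"input_ids": input_ids, "attention_mask": attention_mask}
# )
```

Doing the cast from the model's own declaration means a dtype mismatch cannot silently be the reason an input is rejected.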

Once the Text Processing side is outputting the data I need, I can develop the comparison algorithm, which will take the outputs from both the Image and Text Processing models and produce a percentage match for each tag. Once I have the tag matches, I can assign them as metadata.
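As a sketch of what that comparison could look like (the usual CLIP recipe, not something I've built yet): normalize both embeddings, take the cosine similarity between the image and each tag, and turn the scaled similarities into percentages with a softmax. The function names, the scale of 100, the 50% threshold, and the toy 3-dimensional embeddings are all assumptions for illustration.

```python
import numpy as np

def tag_confidences(image_embedding, text_embeddings, tags, logit_scale=100.0):
    """Return {tag: percent} from one image embedding and one embedding per tag."""
    img = np.asarray(image_embedding, dtype=float)
    txt = np.asarray(text_embeddings, dtype=float)

    # Unit-length vectors, so the dot product is the cosine similarity.
    img = img / np.linalg.norm(img)
    txt = txt / np.linalg.norm(txt, axis=1, keepdims=True)

    logits = logit_scale * (txt @ img)
    logits = logits - logits.max()  # numerical stability for the softmax
    probs = np.exp(logits) / np.exp(logits).sum()
    return {tag: float(100.0 * p) for tag, p in zip(tags, probs)}

def select_tags(confidences, threshold=50.0):
    """Keep only the tags whose confidence reaches the threshold (percent)."""
    return [tag for tag, pct in confidences.items() if pct >= threshold]

# Toy embeddings standing in for real model outputs.
tags = ["weapon", "vehicle", "character"]
text_embeddings = np.eye(3)
image_embedding = np.array([0.2, 0.9, 0.1])

confidences = tag_confidences(image_embedding, text_embeddings, tags)
chosen = select_tags(confidences)
print(confidences, chosen)
```

Because the softmax forces the percentages to sum to 100 across the supplied tags, the scores are only meaningful relative to that tag list; an asset that matches none of the tags will still get a "winner", which is something the parameter file may need to account for.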

Asset to Image

I need to develop a system to convert the view from the asset camera into the image input. From my brief research into this, there seems to be a way to do this in Houdini.

TOPs Network

Once the VisTag system is developed, I will create a TOPs network to automate the process over all assets within a folder. The one problem I foresee is exporting the tagged meshes, as I have had some trouble with this in the past, although this may be an operation I can do in Python.

Metadata to Unreal Collections

I wasn’t aware of the collections system in Unreal until a colleague mentioned it when I was describing this project to them. I’m familiar with attaching Houdini attributes that can be read in Unreal, so I’m going to assume there is a way to assign metadata that can be read as a collection in UE.